Compare commits

1 Commits

contributo

...

WIP/extra-

| Author | SHA1 | Date | |
|---|---|---|---|
| | c1daa4799c | | |

31

.env

@@ -2,26 +2,11 @@

# Be sure to uncomment any line you populate
# Everything is optional, but some features won't work without external API access

# API Keys for external services (backend)
GOOGLE_CLOUD_API_KEY=''
TORRENT_IP_API_KEY=''
SECURITY_TRAILS_API_KEY=''
BUILT_WITH_API_KEY=''
URL_SCAN_API_KEY=''
TRANCO_USERNAME=''
TRANCO_API_KEY=''
CLOUDMERSIVE_API_KEY=''

# API Keys for external services (frontend)
REACT_APP_SHODAN_API_KEY=''
REACT_APP_WHO_API_KEY=''

# Configuration settings
# CHROME_PATH='/usr/bin/chromium' # The path to the Chromium executable
# PORT='3000' # Port to serve the API, when running server.js
# DISABLE_GUI='false' # Disable the GUI, and only serve the API
# API_TIMEOUT_LIMIT='10000' # The timeout limit for API requests, in milliseconds
# API_CORS_ORIGIN='*' # Enable CORS, by setting your allowed hostname(s) here
# API_ENABLE_RATE_LIMIT='true' # Enable rate limiting for the API
# REACT_APP_API_ENDPOINT='/api' # The endpoint for the API (can be local or remote)
# ENABLE_ANALYTICS='false' # Enable Plausible hit counter for the frontend
# GOOGLE_CLOUD_API_KEY=''
# SHODAN_API_KEY=''
# REACT_APP_SHODAN_API_KEY=''
# WHO_API_KEY=''
# REACT_APP_WHO_API_KEY=''
# SECURITY_TRAILS_API_KEY=''
# BUILT_WITH_API_KEY=''
# CI=false

1176

.github/README.md

vendored

1

.github/screenshots/README.md

vendored

@@ -1 +0,0 @@

|

||||

|

||||

|

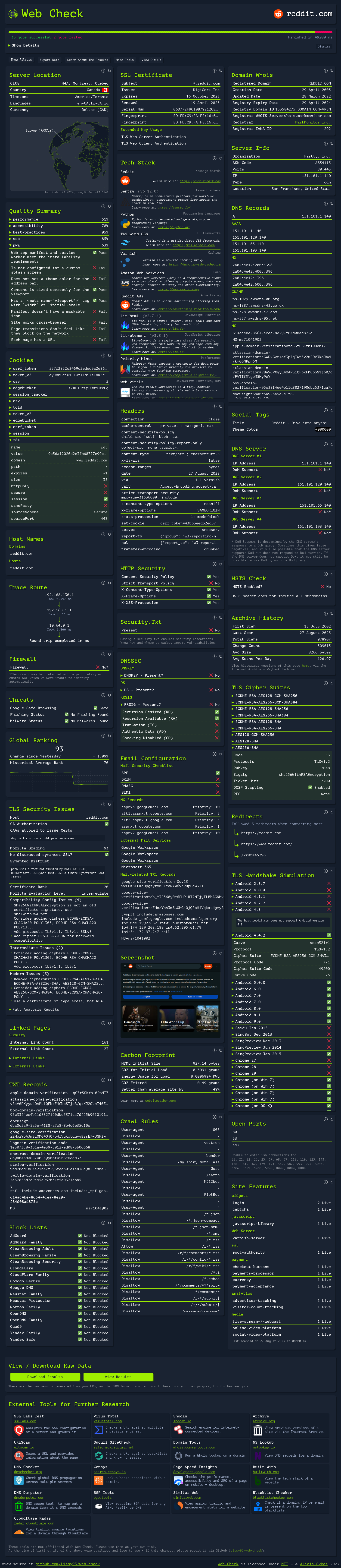

Before Width: | Height: | Size: 2.0 MiB After Width: | Height: | Size: 2.0 MiB |

BIN

.github/screenshots/tiles/archives.png

vendored

|

Before Width: | Height: | Size: 40 KiB |

BIN

.github/screenshots/tiles/block-lists.png

vendored

|

Before Width: | Height: | Size: 95 KiB |

BIN

.github/screenshots/tiles/carbon.png

vendored

|

Before Width: | Height: | Size: 40 KiB |

BIN

.github/screenshots/tiles/dns-server.png

vendored

|

Before Width: | Height: | Size: 40 KiB |

BIN

.github/screenshots/tiles/email-config.png

vendored

|

Before Width: | Height: | Size: 67 KiB |

BIN

.github/screenshots/tiles/firewall.png

vendored

|

Before Width: | Height: | Size: 12 KiB |

BIN

.github/screenshots/tiles/hsts.png

vendored

|

Before Width: | Height: | Size: 27 KiB |

BIN

.github/screenshots/tiles/http-security.png

vendored

|

Before Width: | Height: | Size: 37 KiB |

BIN

.github/screenshots/tiles/linked-pages.png

vendored

|

Before Width: | Height: | Size: 64 KiB |

BIN

.github/screenshots/tiles/ranking.png

vendored

|

Before Width: | Height: | Size: 26 KiB |

BIN

.github/screenshots/tiles/screenshot.png

vendored

|

Before Width: | Height: | Size: 79 KiB |

BIN

.github/screenshots/tiles/security-txt.png

vendored

|

Before Width: | Height: | Size: 63 KiB |

BIN

.github/screenshots/tiles/sitemap.png

vendored

|

Before Width: | Height: | Size: 40 KiB |

BIN

.github/screenshots/tiles/social-tags.png

vendored

|

Before Width: | Height: | Size: 207 KiB |

BIN

.github/screenshots/tiles/tech-stack.png

vendored

|

Before Width: | Height: | Size: 146 KiB |

BIN

.github/screenshots/tiles/threats.png

vendored

|

Before Width: | Height: | Size: 26 KiB |

BIN

.github/screenshots/tiles/tls-cipher-suites.png

vendored

|

Before Width: | Height: | Size: 64 KiB |

|

Before Width: | Height: | Size: 90 KiB |

BIN

.github/screenshots/tiles/tls-security-config.png

vendored

|

Before Width: | Height: | Size: 127 KiB |

BIN

.github/screenshots/wc_carbon.png

vendored

Normal file

|

After Width: | Height: | Size: 31 KiB |

|

Before Width: | Height: | Size: 35 KiB After Width: | Height: | Size: 35 KiB |

|

Before Width: | Height: | Size: 53 KiB After Width: | Height: | Size: 53 KiB |

BIN

.github/screenshots/wc_dnssec-2.png

vendored

Normal file

|

After Width: | Height: | Size: 46 KiB |

|

Before Width: | Height: | Size: 165 KiB After Width: | Height: | Size: 165 KiB |

|

Before Width: | Height: | Size: 44 KiB After Width: | Height: | Size: 44 KiB |

BIN

.github/screenshots/wc_features-2.png

vendored

Normal file

|

After Width: | Height: | Size: 132 KiB |

|

Before Width: | Height: | Size: 73 KiB After Width: | Height: | Size: 73 KiB |

|

Before Width: | Height: | Size: 105 KiB After Width: | Height: | Size: 105 KiB |

|

Before Width: | Height: | Size: 24 KiB After Width: | Height: | Size: 24 KiB |

|

Before Width: | Height: | Size: 94 KiB After Width: | Height: | Size: 94 KiB |

|

Before Width: | Height: | Size: 15 KiB After Width: | Height: | Size: 15 KiB |

|

Before Width: | Height: | Size: 158 KiB After Width: | Height: | Size: 158 KiB |

|

Before Width: | Height: | Size: 23 KiB After Width: | Height: | Size: 23 KiB |

|

Before Width: | Height: | Size: 114 KiB After Width: | Height: | Size: 114 KiB |

|

Before Width: | Height: | Size: 28 KiB After Width: | Height: | Size: 28 KiB |

|

Before Width: | Height: | Size: 46 KiB After Width: | Height: | Size: 46 KiB |

|

Before Width: | Height: | Size: 18 KiB After Width: | Height: | Size: 18 KiB |

|

Before Width: | Height: | Size: 54 KiB After Width: | Height: | Size: 54 KiB |

|

Before Width: | Height: | Size: 123 KiB After Width: | Height: | Size: 123 KiB |

BIN

.github/screenshots/web-check-screenshot1.png

vendored

|

Before Width: | Height: | Size: 3.0 MiB |

BIN

.github/screenshots/web-check-screenshot10.png

vendored

|

Before Width: | Height: | Size: 1.4 MiB |

BIN

.github/screenshots/web-check-screenshot2.png

vendored

|

Before Width: | Height: | Size: 1.7 MiB |

BIN

.github/screenshots/web-check-screenshot3.png

vendored

|

Before Width: | Height: | Size: 2.6 MiB |

BIN

.github/screenshots/web-check-screenshot4.png

vendored

|

Before Width: | Height: | Size: 810 KiB |

37

.github/workflows/credits.yml

vendored

@@ -1,37 +0,0 @@

# Inserts list of community members into ./README.md
name: 💓 Inserts Contributors & Sponsors
on:
  workflow_dispatch: # Manual dispatch
  schedule:
    - cron: '45 1 * * 0' # At 01:45 on Sunday.

jobs:
  # Job #1 - Fetches sponsors and inserts table into readme
  insert-sponsors:
    runs-on: ubuntu-latest
    name: Inserts Sponsors 💓
    steps:
      - name: Checkout
        uses: actions/checkout@v4
      - name: Updates readme with sponsors
        uses: JamesIves/github-sponsors-readme-action@v1
        with:
          token: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
          file: .github/README.md

  # Job #2 - Fetches contributors and inserts table into readme
  insert-contributors:
    runs-on: ubuntu-latest
    name: Inserts Contributors 💓
    steps:
      - name: Updates readme with contributors
        uses: akhilmhdh/contributors-readme-action@v2.3.10
        env:
          GITHUB_TOKEN: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
        with:
          image_size: 80
          readme_path: .github/README.md
          columns_per_row: 6
          commit_message: 'docs: Updates contributors list'
          committer_username: liss-bot
          committer_email: liss-bot@d0h.co

128

.github/workflows/deploy-aws.yml

vendored

@@ -1,128 +0,0 @@

name: 🚀 Deploy to AWS

on:
  workflow_dispatch:
  push:
    branches:
      - master
    tags:
      - '*'
    paths:
      - api/**
      - serverless.yml
      - package.json
      - .github/workflows/deploy-aws.yml

jobs:
  deploy-api:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: 16

      - name: Cache node_modules
        uses: actions/cache@v4
        with:
          path: node_modules
          key: ${{ runner.os }}-yarn-${{ hashFiles('**/yarn.lock') }}
          restore-keys: |
            ${{ runner.os }}-yarn-

      - name: Create GitHub deployment for API
        uses: chrnorm/deployment-action@releases/v2
        id: deployment_api
        with:
          token: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
          environment: AWS (Backend API)
          ref: ${{ github.ref }}

      - name: Install Serverless CLI and dependencies
        run: |
          npm i -g serverless
          yarn

      - name: Deploy to AWS
        env:
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          AWS_ACCOUNT_ID: ${{ secrets.AWS_ACCOUNT_ID }}
        run: serverless deploy

      - name: Update GitHub deployment status (API)
        if: always()
        uses: chrnorm/deployment-status@v2
        with:
          token: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
          state: "${{ job.status }}"
          deployment_id: ${{ steps.deployment_api.outputs.deployment_id }}
          ref: ${{ github.ref }}

  deploy-frontend:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: 16

      - name: Cache node_modules
        uses: actions/cache@v4
        with:
          path: node_modules
          key: ${{ runner.os }}-yarn-${{ hashFiles('**/yarn.lock') }}
          restore-keys: |
            ${{ runner.os }}-yarn-

      - name: Create GitHub deployment for Frontend
        uses: chrnorm/deployment-action@v2
        id: deployment_frontend
        with:
          token: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
          environment: AWS (Frontend Web UI)
          ref: ${{ github.ref }}

      - name: Install dependencies and build
        run: |
          yarn install
          yarn build

      - name: Setup AWS
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1

      - name: Upload to S3
        env:
          AWS_S3_BUCKET: 'web-check-frontend'
        run: aws s3 sync ./build/ s3://$AWS_S3_BUCKET/ --delete

      - name: Invalidate CloudFront cache
        uses: chetan/invalidate-cloudfront-action@v2
        env:
          DISTRIBUTION: E30XKAM2TG9FD8
          PATHS: '/*'
          AWS_REGION: 'us-east-1'
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

      - name: Update GitHub deployment status (Frontend)
        if: always()
        uses: chrnorm/deployment-status@v2
        with:
          token: ${{ secrets.BOT_TOKEN || secrets.GITHUB_TOKEN }}
          state: "${{ job.status }}"
          deployment_id: ${{ steps.deployment_frontend.outputs.deployment_id }}
          ref: ${{ github.ref }}

18

.github/workflows/docker.yml

vendored

@@ -23,14 +23,14 @@ jobs:

|

||||

docker:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Checkout 🛎️

|

||||

- name: Checkout

|

||||

uses: actions/checkout@v2

|

||||

|

||||

- name: Extract tag name 🏷️

|

||||

- name: Extract tag name

|

||||

shell: bash

|

||||

run: echo "GIT_TAG=$(echo ${GITHUB_REF#refs/tags/} | sed 's/\//_/g')" >> $GITHUB_ENV

|

||||

|

||||

- name: Compute tags 🔖

|

||||

- name: Compute tags

|

||||

id: compute-tags

|

||||

run: |

|

||||

if [[ "${{ github.ref }}" == "refs/heads/master" ]]; then

|

||||

@@ -41,33 +41,33 @@ jobs:

|

||||

echo "DOCKERHUB_TAG=${DOCKERHUB_REGISTRY}/${DOCKER_USER}/${IMAGE_NAME}:${GIT_TAG}" >> $GITHUB_ENV

|

||||

fi

|

||||

|

||||

- name: Set up QEMU 🐧

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v1

|

||||

|

||||

- name: Set up Docker Buildx 🐳

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v1

|

||||

|

||||

- name: Login to GitHub Container Registry 🔑

|

||||

- name: Login to GitHub Container Registry

|

||||

uses: docker/login-action@v1

|

||||

with:

|

||||

registry: ${{ env.GHCR_REGISTRY }}

|

||||

username: ${{ github.actor }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

|

||||

- name: Login to DockerHub 🔑

|

||||

- name: Login to DockerHub

|

||||

uses: docker/login-action@v1

|

||||

with:

|

||||

registry: ${{ env.DOCKERHUB_REGISTRY }}

|

||||

username: ${{ env.DOCKER_USER }}

|

||||

password: ${{ secrets.DOCKERHUB_PASSWORD }}

|

||||

|

||||

- name: Build and push Docker images 🛠️

|

||||

- name: Build and push Docker images

|

||||

uses: docker/build-push-action@v2

|

||||

with:

|

||||

context: .

|

||||

file: ./Dockerfile

|

||||

push: true

|

||||

platforms: linux/amd64,linux/arm64/v8

|

||||

platforms: linux/amd64

|

||||

tags: |

|

||||

${{ env.GHCR_TAG }}

|

||||

${{ env.DOCKERHUB_TAG }}

|

||||

|

||||

2

.github/workflows/mirror.yml

vendored

@@ -8,7 +8,7 @@ jobs:

|

||||

codeberg:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v2

|

||||

with: { fetch-depth: 0 }

|

||||

- uses: pixta-dev/repository-mirroring-action@v1

|

||||

with:

|

||||

|

||||

72

.gitignore

vendored

@@ -1,62 +1,28 @@

|

||||

# ------------------------

|

||||

# ENVIRONMENT SETTINGS

|

||||

# ------------------------

|

||||

|

||||

# Keys

|

||||

.env

|

||||

|

||||

# ------------------------

|

||||

# PRODUCTION

|

||||

# ------------------------

|

||||

/build/

|

||||

# dependencies

|

||||

/node_modules

|

||||

/.pnp

|

||||

.pnp.js

|

||||

|

||||

# ------------------------

|

||||

# BUILT FILES

|

||||

# ------------------------

|

||||

dist/

|

||||

.vercel/

|

||||

.netlify/

|

||||

.webpack/

|

||||

.serverless/

|

||||

.astro/

|

||||

# testing

|

||||

/coverage

|

||||

|

||||

# ------------------------

|

||||

# DEPENDENCIES

|

||||

# ------------------------

|

||||

node_modules/

|

||||

.yarn/cache/

|

||||

.yarn/unplugged/

|

||||

.yarn/build-state.yml

|

||||

.yarn/install-state.gz

|

||||

.pnpm/

|

||||

.pnp.*

|

||||

# production

|

||||

/build

|

||||

|

||||

# misc

|

||||

.DS_Store

|

||||

.env.local

|

||||

.env.development.local

|

||||

.env.test.local

|

||||

.env.production.local

|

||||

|

||||

# ------------------------

|

||||

# LOGS

|

||||

# ------------------------

|

||||

logs/

|

||||

*.log

|

||||

npm-debug.log*

|

||||

yarn-debug.log*

|

||||

yarn-error.log*

|

||||

lerna-debug.log*

|

||||

.pnpm-debug.log*

|

||||

|

||||

# ------------------------

|

||||

# TESTING

|

||||

# ------------------------

|

||||

coverage/

|

||||

.nyc_output/

|

||||

|

||||

# ------------------------

|

||||

# OS SPECIFIC

|

||||

# ------------------------

|

||||

.DS_Store

|

||||

Thumbs.db

|

||||

|

||||

# ------------------------

|

||||

# EDITORS

|

||||

# ------------------------

|

||||

.idea/

|

||||

.vscode/

|

||||

*.swp

|

||||

*.swo

|

||||

|

||||

# Local Netlify folder

|

||||

.netlify

|

||||

|

||||

64

Dockerfile

@@ -1,62 +1,12 @@

|

||||

# Specify the Node.js version to use

|

||||

ARG NODE_VERSION=21

|

||||

|

||||

# Specify the Debian version to use, the default is "bullseye"

|

||||

ARG DEBIAN_VERSION=bullseye

|

||||

|

||||

# Use Node.js Docker image as the base image, with specific Node and Debian versions

|

||||

FROM node:${NODE_VERSION}-${DEBIAN_VERSION} AS build

|

||||

|

||||

# Set the container's default shell to Bash and enable some options

|

||||

SHELL ["/bin/bash", "-euo", "pipefail", "-c"]

|

||||

|

||||

# Install Chromium browser and Download and verify Google Chrome’s signing key

|

||||

RUN apt-get update -qq --fix-missing && \

|

||||

apt-get -qqy install --allow-unauthenticated gnupg wget && \

|

||||

wget --quiet --output-document=- https://dl-ssl.google.com/linux/linux_signing_key.pub | gpg --dearmor > /etc/apt/trusted.gpg.d/google-archive.gpg && \

|

||||

echo "deb [arch=amd64] http://dl.google.com/linux/chrome/deb/ stable main" > /etc/apt/sources.list.d/google.list && \

|

||||

apt-get update -qq && \

|

||||

apt-get -qqy --no-install-recommends install chromium traceroute python make g++ && \

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

|

||||

# Run the Chromium browser's version command and redirect its output to the /etc/chromium-version file

|

||||

RUN /usr/bin/chromium --no-sandbox --version > /etc/chromium-version

|

||||

|

||||

# Set the working directory to /app

|

||||

FROM node:16-buster-slim AS base

|

||||

WORKDIR /app

|

||||

|

||||

# Copy package.json and yarn.lock to the working directory

|

||||

COPY package.json yarn.lock ./

|

||||

|

||||

# Run yarn install to install dependencies and clear yarn cache

|

||||

RUN apt-get update && \

|

||||

yarn install --frozen-lockfile --network-timeout 100000 && \

|

||||

rm -rf /app/node_modules/.cache

|

||||

|

||||

# Copy all files to working directory

|

||||

FROM base AS builder

|

||||

COPY . .

|

||||

|

||||

# Run yarn build to build the application

|

||||

RUN yarn build --production

|

||||

|

||||

# Final stage

|

||||

FROM node:${NODE_VERSION}-${DEBIAN_VERSION} AS final

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

COPY package.json yarn.lock ./

|

||||

COPY --from=build /app .

|

||||

|

||||

RUN apt-get update && \

|

||||

apt-get install -y --no-install-recommends chromium traceroute && \

|

||||

apt-get install -y chromium traceroute && \

|

||||

chmod 755 /usr/bin/chromium && \

|

||||

rm -rf /var/lib/apt/lists/* /app/node_modules/.cache

|

||||

|

||||

# Exposed container port, the default is 3000, which can be modified through the environment variable PORT

|

||||

EXPOSE ${PORT:-3000}

|

||||

|

||||

# Set the environment variable CHROME_PATH to specify the path to the Chromium binaries

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

RUN npm install --force

|

||||

EXPOSE 8888

|

||||

ENV CHROME_PATH='/usr/bin/chromium'

|

||||

|

||||

# Define the command executed when the container starts and start the server.js of the Node.js application

|

||||

CMD ["yarn", "start"]

|

||||

CMD ["npm", "run", "serve"]

|

||||

|

||||

@@ -1,51 +0,0 @@

const path = require('path');
const nodeExternals = require('webpack-node-externals');

module.exports = {
  target: 'node',
  mode: 'production',
  entry: {
    'carbon': './api/carbon.js',
    'cookies': './api/cookies.js',
    'dns-server': './api/dns-server.js',
    'dns': './api/dns.js',
    'dnssec': './api/dnssec.js',
    'features': './api/features.js',
    'get-ip': './api/get-ip.js',
    'headers': './api/headers.js',
    'hsts': './api/hsts.js',
    'linked-pages': './api/linked-pages.js',
    'mail-config': './api/mail-config.js',
    'ports': './api/ports.js',
    'quality': './api/quality.js',
    'redirects': './api/redirects.js',
    'robots-txt': './api/robots-txt.js',
    'screenshot': './api/screenshot.js',
    'security-txt': './api/security-txt.js',
    'sitemap': './api/sitemap.js',
    'social-tags': './api/social-tags.js',
    'ssl': './api/ssl.js',
    'status': './api/status.js',
    'tech-stack': './api/tech-stack.js',
    'trace-route': './api/trace-route.js',
    'txt-records': './api/txt-records.js',
    'whois': './api/whois.js',
  },
  externals: [nodeExternals()],
  output: {
    filename: '[name].js',
    path: path.resolve(__dirname, '.webpack'),
    libraryTarget: 'commonjs2'
  },
  module: {
    rules: [
      {
        test: /\.js$/,
        use: {
          loader: 'babel-loader'
        },
        exclude: /node_modules/,
      }
    ]
  }
};

@@ -1,131 +0,0 @@

const normalizeUrl = (url) => {
  return url.startsWith('http') ? url : `https://${url}`;
};

// If present, set a shorter timeout for API requests
const TIMEOUT = process.env.API_TIMEOUT_LIMIT ? parseInt(process.env.API_TIMEOUT_LIMIT, 10) : 60000;

// If present, set CORS allowed origins for responses
const ALLOWED_ORIGINS = process.env.API_CORS_ORIGIN || '*';

// Set the platform currently being used
let PLATFORM = 'NETLIFY';
if (process.env.PLATFORM) { PLATFORM = process.env.PLATFORM.toUpperCase(); }
else if (process.env.VERCEL) { PLATFORM = 'VERCEL'; }
else if (process.env.WC_SERVER) { PLATFORM = 'NODE'; }

// Define the headers to be returned with each response
const headers = {
  'Access-Control-Allow-Origin': ALLOWED_ORIGINS,
  'Access-Control-Allow-Credentials': true,
  'Content-Type': 'application/json;charset=UTF-8',
};


const timeoutErrorMsg = 'You can re-trigger this request, by clicking "Retry"\n'
+ 'If you\'re running your own instance of Web Check, then you can '
+ 'resolve this issue, by increasing the timeout limit in the '
+ '`API_TIMEOUT_LIMIT` environmental variable to a higher value (in milliseconds), '
+ 'or if you\'re hosting on Vercel increase the maxDuration in vercel.json.\n\n'
+ `The public instance currently has a lower timeout of ${TIMEOUT}ms `
+ 'in order to keep running costs affordable, so that Web Check can '
+ 'remain freely available for everyone.';

// A middleware function used by all API routes on all platforms
const commonMiddleware = (handler) => {

  // Create a timeout promise, to throw an error if a request takes too long
  const createTimeoutPromise = (timeoutMs) => {
    return new Promise((_, reject) => {
      setTimeout(() => {
        reject(new Error(`Request timed-out after ${timeoutMs} ms`));
      }, timeoutMs);
    });
  };

  // Vercel
  const vercelHandler = async (request, response) => {
    const queryParams = request.query || {};
    const rawUrl = queryParams.url;

    if (!rawUrl) {
      return response.status(500).json({ error: 'No URL specified' });
    }

    const url = normalizeUrl(rawUrl);

    try {
      // Race the handler against the timeout
      const handlerResponse = await Promise.race([
        handler(url, request),
        createTimeoutPromise(TIMEOUT)
      ]);

      if (handlerResponse.body && handlerResponse.statusCode) {
        response.status(handlerResponse.statusCode).json(handlerResponse.body);
      } else {
        response.status(200).json(
          typeof handlerResponse === 'object' ? handlerResponse : JSON.parse(handlerResponse)
        );
      }
    } catch (error) {
      let errorCode = 500;
      if (error.message.includes('timed-out') || response.statusCode === 504) {
        errorCode = 408;
        error.message = `${error.message}\n\n${timeoutErrorMsg}`;
      }
      response.status(errorCode).json({ error: error.message });
    }
  };

  // Netlify
  const netlifyHandler = async (event, context, callback) => {
    const queryParams = event.queryStringParameters || event.query || {};
    const rawUrl = queryParams.url;

    if (!rawUrl) {
      callback(null, {
        statusCode: 500,
        body: JSON.stringify({ error: 'No URL specified' }),
        headers,
      });
      return;
    }

    const url = normalizeUrl(rawUrl);

    try {
      // Race the handler against the timeout
      const handlerResponse = await Promise.race([
        handler(url, event, context),
        createTimeoutPromise(TIMEOUT)
      ]);

      if (handlerResponse.body && handlerResponse.statusCode) {
        callback(null, handlerResponse);
      } else {
        callback(null, {
          statusCode: 200,
          body: typeof handlerResponse === 'object' ? JSON.stringify(handlerResponse) : handlerResponse,
          headers,
        });
      }
    } catch (error) {
      callback(null, {
        statusCode: 500,
        body: JSON.stringify({ error: error.message }),
        headers,
      });
    }
  };

  // The format of the handlers varies between platforms
  const nativeMode = (['VERCEL', 'NODE'].includes(PLATFORM));
  return nativeMode ? vercelHandler : netlifyHandler;
};

if (PLATFORM === 'NETLIFY') {
  module.exports = commonMiddleware;
}

export default commonMiddleware;
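Review note on the timeout race in the removed middleware: `createTimeoutPromise` never clears its timer, so the `setTimeout` stays pending after the handler has already won the race. The following is a minimal sketch of one way to cancel it, not the project's implementation. `createTimeout` and `withTimeout` are hypothetical names introduced only for illustration.

```javascript
// Hypothetical helper: a promise that rejects after `timeoutMs`,
// paired with a cancel() that clears the pending timer.
const createTimeout = (timeoutMs) => {
  let timer;
  const promise = new Promise((_, reject) => {
    timer = setTimeout(() => {
      reject(new Error(`Request timed-out after ${timeoutMs} ms`));
    }, timeoutMs);
  });
  return { promise, cancel: () => clearTimeout(timer) };
};

// Hypothetical wrapper: race `work()` against the timeout and always
// clear the timer afterwards, whichever side settles first.
const withTimeout = async (work, timeoutMs) => {
  const timeout = createTimeout(timeoutMs);
  try {
    return await Promise.race([work(), timeout.promise]);
  } finally {
    timeout.cancel();
  }
};
```

Under these assumptions, a handler could be wrapped as `withTimeout(() => handler(url, request), TIMEOUT)`. Note that losing the race does not abort the underlying work; it only stops waiting for it.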

@@ -1,84 +0,0 @@

import axios from 'axios';
import middleware from './_common/middleware.js';

const convertTimestampToDate = (timestamp) => {
  const [year, month, day, hour, minute, second] = [
    timestamp.slice(0, 4),
    timestamp.slice(4, 6) - 1,
    timestamp.slice(6, 8),
    timestamp.slice(8, 10),
    timestamp.slice(10, 12),
    timestamp.slice(12, 14),
  ].map(num => parseInt(num, 10));

  return new Date(year, month, day, hour, minute, second);
}

const countPageChanges = (results) => {
  let prevDigest = null;
  return results.reduce((acc, curr) => {
    if (curr[2] !== prevDigest) {
      prevDigest = curr[2];
      return acc + 1;
    }
    return acc;
  }, -1);
}

const getAveragePageSize = (scans) => {
  const totalSize = scans.map(scan => parseInt(scan[3], 10)).reduce((sum, size) => sum + size, 0);
  return Math.round(totalSize / scans.length);
};

const getScanFrequency = (firstScan, lastScan, totalScans, changeCount) => {
  const formatToTwoDecimal = num => parseFloat(num.toFixed(2));

  const dayFactor = (lastScan - firstScan) / (1000 * 60 * 60 * 24);
  const daysBetweenScans = formatToTwoDecimal(dayFactor / totalScans);
  const daysBetweenChanges = formatToTwoDecimal(dayFactor / changeCount);
  const scansPerDay = formatToTwoDecimal((totalScans - 1) / dayFactor);
  const changesPerDay = formatToTwoDecimal(changeCount / dayFactor);
  return {
    daysBetweenScans,
    daysBetweenChanges,
    scansPerDay,
    changesPerDay,
  };
};

const wayBackHandler = async (url) => {
  const cdxUrl = `https://web.archive.org/cdx/search/cdx?url=${url}&output=json&fl=timestamp,statuscode,digest,length,offset`;

  try {
    const { data } = await axios.get(cdxUrl);

    // Check there's data
    if (!data || !Array.isArray(data) || data.length <= 1) {
      return { skipped: 'Site has never before been archived via the Wayback Machine' };
    }

    // Remove the header row
    data.shift();

    // Process and return the results
    const firstScan = convertTimestampToDate(data[0][0]);
    const lastScan = convertTimestampToDate(data[data.length - 1][0]);
    const totalScans = data.length;
    const changeCount = countPageChanges(data);
    return {
      firstScan,
      lastScan,
      totalScans,
      changeCount,
      averagePageSize: getAveragePageSize(data),
      scanFrequency: getScanFrequency(firstScan, lastScan, totalScans, changeCount),
      scans: data,
      scanUrl: url,
    };
  } catch (err) {
    return { error: `Error fetching Wayback data: ${err.message}` };
  }
};

export const handler = middleware(wayBackHandler);
export default handler;
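Review note on the removed Wayback handler: a small worked example of the row processing may help when reading it. The sketch below assumes each CDX row is `[timestamp, statuscode, digest, length, offset]`, as requested by the `fl=` parameter in the diff, and assumes the 14-digit timestamps are UTC (the removed code builds the `Date` in local time instead). `parseWaybackTimestamp`, `countChanges` and `safeRate` are hypothetical names, not functions from the original file.

```javascript
// Hypothetical helper: parse a 14-digit timestamp (YYYYMMDDhhmmss) as UTC.
const parseWaybackTimestamp = (ts) => new Date(Date.UTC(
  Number(ts.slice(0, 4)),
  Number(ts.slice(4, 6)) - 1, // JavaScript months are zero-based
  Number(ts.slice(6, 8)),
  Number(ts.slice(8, 10)),
  Number(ts.slice(10, 12)),
  Number(ts.slice(12, 14)),
));

// Hypothetical helper: count how often the digest (index 2) differs from
// the previous row. For non-empty input this matches the reduce in the
// diff, which starts at -1 so the first snapshot is not counted.
const countChanges = (rows) => {
  let changes = 0;
  for (let i = 1; i < rows.length; i += 1) {
    if (rows[i][2] !== rows[i - 1][2]) changes += 1;
  }
  return changes;
};

// Hypothetical guard: the removed getScanFrequency divides by changeCount
// and by the day span, either of which can be 0 (yielding Infinity or NaN).
const safeRate = (numerator, denominator) => (
  denominator > 0 ? parseFloat((numerator / denominator).toFixed(2)) : 0
);

const rows = [
  ['20230101000000', '200', 'A', '100', '0'],
  ['20230102000000', '200', 'A', '100', '0'],
  ['20230103000000', '200', 'B', '120', '0'],
  ['20230104000000', '200', 'B', '120', '0'],
  ['20230105000000', '200', 'A', '100', '0'],
];

console.log(parseWaybackTimestamp('20230115103000').toISOString()); // 2023-01-15T10:30:00.000Z
console.log(countChanges(rows)); // 2 (A -> B, then B -> A)
console.log(safeRate(4, 0)); // 0 instead of Infinity
```

Returning 0 for an undefined rate is only one possible convention; a caller might prefer `null` so that the frontend can distinguish "no data" from "no changes".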

@@ -1,105 +0,0 @@

|

||||

import dns from 'dns';

|

||||

import { URL } from 'url';

|

||||

import middleware from './_common/middleware.js';

|

||||

|

||||

const DNS_SERVERS = [

|

||||

{ name: 'AdGuard', ip: '176.103.130.130' },

|

||||

{ name: 'AdGuard Family', ip: '176.103.130.132' },

|

||||

{ name: 'CleanBrowsing Adult', ip: '185.228.168.10' },

|

||||

{ name: 'CleanBrowsing Family', ip: '185.228.168.168' },

|

||||

{ name: 'CleanBrowsing Security', ip: '185.228.168.9' },

|

||||

{ name: 'CloudFlare', ip: '1.1.1.1' },

|

||||

{ name: 'CloudFlare Family', ip: '1.1.1.3' },

|

||||

{ name: 'Comodo Secure', ip: '8.26.56.26' },

|

||||

  { name: 'Google DNS', ip: '8.8.8.8' },
  { name: 'Neustar Family', ip: '156.154.70.3' },
  { name: 'Neustar Protection', ip: '156.154.70.2' },
  { name: 'Norton Family', ip: '199.85.126.20' },
  { name: 'OpenDNS', ip: '208.67.222.222' },
  { name: 'OpenDNS Family', ip: '208.67.222.123' },
  { name: 'Quad9', ip: '9.9.9.9' },
  { name: 'Yandex Family', ip: '77.88.8.7' },
  { name: 'Yandex Safe', ip: '77.88.8.88' },
];
const knownBlockIPs = [
  '146.112.61.106', // OpenDNS
  '185.228.168.10', // CleanBrowsing
  '8.26.56.26', // Comodo
  '9.9.9.9', // Quad9
  '208.69.38.170', // Some OpenDNS IPs
  '208.69.39.170', // Some OpenDNS IPs
  '208.67.222.222', // OpenDNS
  '208.67.222.123', // OpenDNS FamilyShield
  '199.85.126.10', // Norton
  '199.85.126.20', // Norton Family
  '156.154.70.22', // Neustar
  '77.88.8.7', // Yandex
  '77.88.8.8', // Yandex
  '::1', // Localhost IPv6
  '2a02:6b8::feed:0ff', // Yandex DNS
  '2a02:6b8::feed:bad', // Yandex Safe
  '2a02:6b8::feed:a11', // Yandex Family
  '2620:119:35::35', // OpenDNS
  '2620:119:53::53', // OpenDNS FamilyShield
  '2606:4700:4700::1111', // Cloudflare
  '2606:4700:4700::1001', // Cloudflare
  '2001:4860:4860::8888', // Google DNS
  '2a0d:2a00:1::', // AdGuard
  '2a0d:2a00:2::' // AdGuard Family
];

const isDomainBlocked = async (domain, serverIP) => {
  return new Promise((resolve) => {
    dns.resolve4(domain, { server: serverIP }, (err, addresses) => {
      if (!err) {
        if (addresses.some(addr => knownBlockIPs.includes(addr))) {
          resolve(true);
          return;
        }
        resolve(false);
        return;
      }

      dns.resolve6(domain, { server: serverIP }, (err6, addresses6) => {
        if (!err6) {
          if (addresses6.some(addr => knownBlockIPs.includes(addr))) {
            resolve(true);
            return;
          }
          resolve(false);
          return;
        }
        if (err6.code === 'ENOTFOUND' || err6.code === 'SERVFAIL') {
          resolve(true);
        } else {
          resolve(false);
        }
      });
    });
  });
};

const checkDomainAgainstDnsServers = async (domain) => {
  let results = [];

  for (let server of DNS_SERVERS) {
    const isBlocked = await isDomainBlocked(domain, server.ip);
    results.push({
      server: server.name,
      serverIp: server.ip,
      isBlocked,
    });
  }

  return results;
};

export const blockListHandler = async (url) => {
  const domain = new URL(url).hostname;
  const results = await checkDomainAgainstDnsServers(domain);
  return { blocklists: results };
};

export const handler = middleware(blockListHandler);
export default handler;
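As far as the Node.js documentation describes it, the options object accepted by `dns.resolve4` and `dns.resolve6` only recognises `ttl`, so the `{ server: serverIP }` argument in the block-list code above is probably ignored and every lookup goes through the system resolver. A minimal sketch of querying one specific DNS server instead, assuming a per-call `Resolver` instance from `dns.promises`. The helper names `isBlockedAnswer`, `resolveVia` and `isDomainBlockedBy` are illustrative and do not appear in the diff.

```javascript
const dns = require('dns');
const { Resolver } = dns.promises;

// Hypothetical helper: true when any resolved address is a known block-page IP.
const isBlockedAnswer = (addresses, blockIPs) =>
  addresses.some((addr) => blockIPs.includes(addr));

// Hypothetical helper: resolve A records through one specific DNS server.
const resolveVia = async (domain, serverIP) => {
  const resolver = new Resolver();
  resolver.setServers([serverIP]); // affects only this resolver instance
  return resolver.resolve4(domain);
};

// Assumed usage: combine the two helpers for a single server.
const isDomainBlockedBy = async (domain, serverIP, blockIPs) => {
  try {
    return isBlockedAnswer(await resolveVia(domain, serverIP), blockIPs);
  } catch (err) {
    // Follows the diff's choice to treat not-found and server-failure
    // answers as "blocked"; the constants come from the dns module itself.
    return err.code === dns.NOTFOUND || err.code === dns.SERVFAIL;
  }
};
```

Using the module's own error-code constants avoids hard-coding the strings, which may differ from the literals compared in the diff.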

@@ -1,20 +1,30 @@

import https from 'https';
import middleware from './_common/middleware.js';
const https = require('https');

exports.handler = async function(event, context) {
  const siteURL = event.queryStringParameters.url;

const hstsHandler = async (url, event, context) => {
  const errorResponse = (message, statusCode = 500) => {
    return {
      statusCode: statusCode,
      body: JSON.stringify({ error: message }),
    };
  };
  const hstsIncompatible = (message, compatible = false, hstsHeader = null ) => {
    return { message, compatible, hstsHeader };
  const hstsIncompatible = (message, statusCode = 200) => {
    return {
      statusCode: statusCode,
      body: JSON.stringify({ message, compatible: false }),
    };
  };

  if (!siteURL) {
    return {
      statusCode: 400,
      body: JSON.stringify({ error: 'URL parameter is missing!' }),
    };
  }

  return new Promise((resolve, reject) => {
    const req = https.request(url, res => {
    const req = https.request(siteURL, res => {
      const headers = res.headers;
      const hstsHeader = headers['strict-transport-security'];


@@ -32,7 +42,14 @@ const hstsHandler = async (url, event, context) => {

        } else if (!preload) {
          resolve(hstsIncompatible(`HSTS header does not contain the preload directive.`));
        } else {
          resolve(hstsIncompatible(`Site is compatible with the HSTS preload list!`, true, hstsHeader));
          resolve({
            statusCode: 200,
            body: JSON.stringify({
              message: "Site is compatible with the HSTS preload list!",
              compatible: true,
              hstsHeader: hstsHeader,
            }),
          });
        }
      }
    });

@@ -44,6 +61,3 @@ const hstsHandler = async (url, event, context) => {

    req.end();
  });
};

export const handler = middleware(hstsHandler);
export default handler;
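The hunks above show only the final `preload` branch of the HSTS check; the earlier branches are elided from this diff. A rough sketch of how a `Strict-Transport-Security` value could be split into the directives such a check needs. The helper names `parseHsts` and `isPreloadReady` are hypothetical, and the one-year `minMaxAge` default is an assumption passed in as a parameter rather than something taken from the diff.

```javascript
// Hypothetical helper: split a Strict-Transport-Security value into directives.
const parseHsts = (headerValue) => {
  const directives = headerValue
    .split(';')
    .map((directive) => directive.trim().toLowerCase());
  const maxAgeDirective = directives.find((d) => d.startsWith('max-age='));
  const maxAge = maxAgeDirective
    ? parseInt(maxAgeDirective.slice('max-age='.length).replace(/"/g, ''), 10)
    : NaN;
  return {
    maxAge, // NaN when the directive is missing or not numeric
    includeSubDomains: directives.includes('includesubdomains'),
    preload: directives.includes('preload'),
  };
};

// Hypothetical helper: minMaxAge (seconds) is an assumed threshold, not from the diff.
const isPreloadReady = (headerValue, minMaxAge = 31536000) => {
  const { maxAge, includeSubDomains, preload } = parseHsts(headerValue);
  return Number.isFinite(maxAge) && maxAge >= minMaxAge && includeSubDomains && preload;
};
```

Directive names are compared case-insensitively here, which is why each one is lower-cased before matching.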

@@ -1,5 +1,4 @@

import net from 'net';
import middleware from './_common/middleware.js';
const net = require('net');

// A list of commonly used ports.
const PORTS = [

@@ -13,7 +12,7 @@ async function checkPort(port, domain) {

  return new Promise((resolve, reject) => {
    const socket = new net.Socket();

    socket.setTimeout(1500);
    socket.setTimeout(1500); // you may want to adjust the timeout

    socket.once('connect', () => {
      socket.destroy();

@@ -34,8 +33,12 @@ async function checkPort(port, domain) {

  });
}

const portsHandler = async (url, event, context) => {
  const domain = url.replace(/(^\w+:|^)\/\//, '');
exports.handler = async (event, context) => {
  const domain = event.queryStringParameters.url;

  if (!domain) {
    return errorResponse('Missing domain parameter.');
  }

  const delay = ms => new Promise(res => setTimeout(res, ms));
  const timeout = delay(9000);

@@ -73,16 +76,15 @@ const portsHandler = async (url, event, context) => {

    return errorResponse('The function timed out before completing.');
  }

  // Sort openPorts and failedPorts before returning
  openPorts.sort((a, b) => a - b);
  failedPorts.sort((a, b) => a - b);

  return { openPorts, failedPorts };
  return {
    statusCode: 200,
    body: JSON.stringify({ openPorts, failedPorts }),
  };
};

const errorResponse = (message, statusCode = 444) => {
  return { error: message };
  return {
    statusCode: statusCode,
    body: JSON.stringify({ error: message }),
  };
};

export const handler = middleware(portsHandler);
export default handler;

60

api/content-links.js

Normal file

@@ -0,0 +1,60 @@

const axios = require('axios');
const cheerio = require('cheerio');
const urlLib = require('url');

exports.handler = async (event, context) => {
  let url = event.queryStringParameters.url;

  // Check if url includes protocol
  if (!url.startsWith('http://') && !url.startsWith('https://')) {
    url = 'http://' + url;
  }

  try {
    const response = await axios.get(url);
    const html = response.data;
    const $ = cheerio.load(html);
    const internalLinksMap = new Map();
    const externalLinksMap = new Map();

    $('a[href]').each((i, link) => {
      const href = $(link).attr('href');
      const absoluteUrl = urlLib.resolve(url, href);

      if (absoluteUrl.startsWith(url)) {
        const count = internalLinksMap.get(absoluteUrl) || 0;
        internalLinksMap.set(absoluteUrl, count + 1);
      } else if (href.startsWith('http://') || href.startsWith('https://')) {
        const count = externalLinksMap.get(absoluteUrl) || 0;
        externalLinksMap.set(absoluteUrl, count + 1);
      }
    });

    // Convert maps to sorted arrays
    const internalLinks = [...internalLinksMap.entries()].sort((a, b) => b[1] - a[1]).map(entry => entry[0]);
    const externalLinks = [...externalLinksMap.entries()].sort((a, b) => b[1] - a[1]).map(entry => entry[0]);

    if (internalLinks.length === 0 && externalLinks.length === 0) {
      return {
        statusCode: 400,
        body: JSON.stringify({
          skipped: 'No internal or external links found. '
            + 'This may be due to the website being dynamically rendered, using a client-side framework (like React), and without SSR enabled. '
            + 'That would mean that the static HTML returned from the HTTP request doesn\'t contain any meaningful content for Web-Check to analyze. '
            + 'You can rectify this by using a headless browser to render the page instead.',
        }),
      };
    }

    return {
      statusCode: 200,
      body: JSON.stringify({ internal: internalLinks, external: externalLinks }),
    };
  } catch (error) {
    console.log(error);
    return {
      statusCode: 500,
      body: JSON.stringify({ error: 'Failed fetching data' }),
    };
  }
};
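The internal/external split in `api/content-links.js` above relies on `absoluteUrl.startsWith(url)` and on the legacy `url.resolve` helper. One possible alternative, shown only as a sketch, compares origins with the WHATWG `URL` class. This is a swapped-in technique rather than what the diff does, and `classifyLink` is a hypothetical name.

```javascript
// Hypothetical helper: classify one href relative to the page it was found on.
// Returns null for links that are not http(s) or cannot be parsed.
const classifyLink = (href, pageUrl) => {
  let absolute;
  try {
    absolute = new URL(href, pageUrl); // resolves relative hrefs against the page
  } catch {
    return null; // unparsable href
  }
  if (!['http:', 'https:'].includes(absolute.protocol)) {
    return null; // e.g. mailto:, javascript:, tel:
  }
  const kind = absolute.origin === new URL(pageUrl).origin ? 'internal' : 'external';
  return { kind, url: absolute.href };
};
```

Comparing origins treats every same-site path as internal, whereas the prefix test in the diff only counts links that begin with the exact scanned URL, so the two approaches can classify the same link differently.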

@@ -1,58 +0,0 @@

import axios from 'axios';
import puppeteer from 'puppeteer';
import middleware from './_common/middleware.js';

const getPuppeteerCookies = async (url) => {
  const browser = await puppeteer.launch({
    headless: 'new',
    args: ['--no-sandbox', '--disable-setuid-sandbox'],
  });

  try {
    const page = await browser.newPage();
    const navigationPromise = page.goto(url, { waitUntil: 'networkidle2' });
    const timeoutPromise = new Promise((_, reject) =>
      setTimeout(() => reject(new Error('Puppeteer took too long!')), 3000)
    );
    await Promise.race([navigationPromise, timeoutPromise]);
    return await page.cookies();
  } finally {
    await browser.close();
  }
};

const cookieHandler = async (url) => {
  let headerCookies = null;
  let clientCookies = null;

  try {
    const response = await axios.get(url, {
      withCredentials: true,
      maxRedirects: 5,
    });
    headerCookies = response.headers['set-cookie'];
  } catch (error) {
    if (error.response) {
      return { error: `Request failed with status ${error.response.status}: ${error.message}` };
    } else if (error.request) {
      return { error: `No response received: ${error.message}` };
    } else {
      return { error: `Error setting up request: ${error.message}` };
    }
  }

  try {
    clientCookies = await getPuppeteerCookies(url);
  } catch (_) {
    clientCookies = null;
  }

  if (!headerCookies && (!clientCookies || clientCookies.length === 0)) {
    return { skipped: 'No cookies' };
  }

  return { headerCookies, clientCookies };
};

export const handler = middleware(cookieHandler);
export default handler;

@@ -1,7 +1,16 @@

import https from 'https';
import middleware from './_common/middleware.js';
const https = require('https');

exports.handler = async function(event, context) {
  let { url } = event.queryStringParameters;

  if (!url) {
    return errorResponse('URL query parameter is required.');
  }

  // Extract hostname from URL
  const parsedUrl = new URL(url);
  const domain = parsedUrl.hostname;

const dnsSecHandler = async (domain) => {
  const dnsTypes = ['DNSKEY', 'DS', 'RRSIG'];
  const records = {};


@@ -25,11 +34,7 @@ const dnsSecHandler = async (domain) => {

          });

          res.on('end', () => {
            try {
              resolve(JSON.parse(data));
            } catch (error) {
              reject(new Error('Invalid JSON response'));
            }
          });

          res.on('error', error => {

@@ -46,12 +51,19 @@ const dnsSecHandler = async (domain) => {

        records[type] = { isFound: false, answer: null, response: dnsResponse};
      }
    } catch (error) {
      throw new Error(`Error fetching ${type} record: ${error.message}`); // This will be caught and handled by the commonMiddleware
      return errorResponse(`Error fetching ${type} record: ${error.message}`);
    }
  }

  return records;
  return {
    statusCode: 200,
    body: JSON.stringify(records),
  };
};

export const handler = middleware(dnsSecHandler);
export default handler;
const errorResponse = (message, statusCode = 444) => {
  return {
    statusCode: statusCode,
    body: JSON.stringify({ error: message }),
  };
};

@@ -1,10 +1,11 @@

import { promises as dnsPromises, lookup } from 'dns';
import axios from 'axios';
import middleware from './_common/middleware.js';
const dns = require('dns');
const dnsPromises = dns.promises;
// const https = require('https');
const axios = require('axios');

const dnsHandler = async (url) => {
exports.handler = async (event) => {
  const domain = event.queryStringParameters.url.replace(/^(?:https?:\/\/)?/i, "");
  try {
    const domain = url.replace(/^(?:https?:\/\/)?/i, "");
    const addresses = await dnsPromises.resolve4(domain);
    const results = await Promise.all(addresses.map(async (address) => {
      const hostname = await dnsPromises.reverse(address).catch(() => null);

@@ -21,7 +22,6 @@ const dnsHandler = async (url) => {

        dohDirectSupports,
      };
    }));

    // let dohMozillaSupport = false;
    // try {
    // const mozillaList = await axios.get('https://firefox.settings.services.mozilla.com/v1/buckets/security-state/collections/onecrl/records');

@@ -29,18 +29,20 @@ const dnsHandler = async (url) => {

    // } catch (error) {
    // console.error(error);
    // }

    return {
      statusCode: 200,
      body: JSON.stringify({
        domain,
        dns: results,
        // dohMozillaSupport,
      }),
    };
  } catch (error) {
    throw new Error(`An error occurred while resolving DNS. ${error.message}`); // This will be caught and handled by the commonMiddleware
    return {
      statusCode: 500,
      body: JSON.stringify({
        error: `An error occurred while resolving DNS. ${error.message}`,
      }),
    };
  }
};


export const handler = middleware(dnsHandler);
export default handler;

33

api/find-url-ip.js

Normal file

@@ -0,0 +1,33 @@

const dns = require('dns');

/* Lambda function to fetch the IP address of a given URL */
exports.handler = function (event, context, callback) {
  const addressParam = event.queryStringParameters.url;

  if (!addressParam) {
    callback(null, errorResponse('Address parameter is missing.'));
    return;
  }

  const address = decodeURIComponent(addressParam)
    .replaceAll('https://', '')
    .replaceAll('http://', '');

  dns.lookup(address, (err, ip, family) => {
    if (err) {
      callback(null, errorResponse(err.message));
    } else {
      callback(null, {
        statusCode: 200,
        body: JSON.stringify({ ip, family }),
      });
    }
  });
};

const errorResponse = (message, statusCode = 444) => {
  return {
    statusCode: statusCode,
    body: JSON.stringify({ error: message }),
  };
};
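Several handlers in this diff derive a host by stripping the scheme with string replacement (`replaceAll('https://', '')` in `api/find-url-ip.js`, and regex variants elsewhere), which leaves any port, path, or query string in the value handed to the DNS call. A small sketch of extracting just the hostname with the WHATWG `URL` class. `toHostname` is a hypothetical helper, not part of the diff.

```javascript
// Hypothetical helper: reduce user input such as "example.com:8080/x" or
// "https://example.com/path" to a bare hostname.
const toHostname = (input) => {
  // Prepend a scheme only when the input does not already start with "scheme://".
  const hasScheme = /^[a-z][a-z0-9+.-]*:\/\//i.test(input);
  const withScheme = hasScheme ? input : `http://${input}`;
  return new URL(withScheme).hostname; // throws TypeError on unparsable input
};
```

Because `new URL` throws on malformed input, a caller would likely wrap this in `try`/`catch` and return one of the existing error responses.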

114

api/firewall.js

@@ -1,114 +0,0 @@

import axios from 'axios';
import middleware from './_common/middleware.js';

const hasWaf = (waf) => {
  return {
    hasWaf: true, waf,
  }
};

const firewallHandler = async (url) => {
  const fullUrl = url.startsWith('http') ? url : `http://${url}`;

  try {
    const response = await axios.get(fullUrl);

    const headers = response.headers;

    if (headers['server'] && headers['server'].includes('cloudflare')) {
      return hasWaf('Cloudflare');
    }

    if (headers['x-powered-by'] && headers['x-powered-by'].includes('AWS Lambda')) {
      return hasWaf('AWS WAF');
    }

    if (headers['server'] && headers['server'].includes('AkamaiGHost')) {
      return hasWaf('Akamai');
    }

    if (headers['server'] && headers['server'].includes('Sucuri')) {
      return hasWaf('Sucuri');
    }

    if (headers['server'] && headers['server'].includes('BarracudaWAF')) {
      return hasWaf('Barracuda WAF');
    }

    if (headers['server'] && (headers['server'].includes('F5 BIG-IP') || headers['server'].includes('BIG-IP'))) {
      return hasWaf('F5 BIG-IP');
    }

    if (headers['x-sucuri-id'] || headers['x-sucuri-cache']) {
      return hasWaf('Sucuri CloudProxy WAF');
    }

    if (headers['server'] && headers['server'].includes('FortiWeb')) {
      return hasWaf('Fortinet FortiWeb WAF');
    }

    if (headers['server'] && headers['server'].includes('Imperva')) {
      return hasWaf('Imperva SecureSphere WAF');
    }

    if (headers['x-protected-by'] && headers['x-protected-by'].includes('Sqreen')) {
      return hasWaf('Sqreen');
    }

    if (headers['x-waf-event-info']) {
      return hasWaf('Reblaze WAF');
    }

    if (headers['set-cookie'] && headers['set-cookie'].includes('_citrix_ns_id')) {
      return hasWaf('Citrix NetScaler');
    }

    if (headers['x-denied-reason'] || headers['x-wzws-requested-method']) {
      return hasWaf('WangZhanBao WAF');
    }

    if (headers['x-webcoment']) {
      return hasWaf('Webcoment Firewall');
    }

    if (headers['server'] && headers['server'].includes('Yundun')) {
      return hasWaf('Yundun WAF');
    }

    if (headers['x-yd-waf-info'] || headers['x-yd-info']) {
      return hasWaf('Yundun WAF');
    }

    if (headers['server'] && headers['server'].includes('Safe3WAF')) {
      return hasWaf('Safe3 Web Application Firewall');
    }

    if (headers['server'] && headers['server'].includes('NAXSI')) {
      return hasWaf('NAXSI WAF');
    }

    if (headers['x-datapower-transactionid']) {
      return hasWaf('IBM WebSphere DataPower');
    }

    if (headers['server'] && headers['server'].includes('QRATOR')) {
      return hasWaf('QRATOR WAF');
    }

    if (headers['server'] && headers['server'].includes('ddos-guard')) {
      return hasWaf('DDoS-Guard WAF');
    }

    return {
      hasWaf: false,
    }
  } catch (error) {
    return {
      statusCode: 500,
      body: JSON.stringify({ error: error.message }),
    };
  }
};

export const handler = middleware(firewallHandler);
export default handler;
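The header checks in `api/firewall.js` above all follow the same shape, so they could be expressed as data. A minimal table-driven sketch, assuming only a subset of the rules for brevity. `WAF_RULES`, `headerText` and `detectWaf` are hypothetical names. The sketch also joins array-valued headers before matching, on the assumption that `set-cookie` arrives as an array of cookie strings, in which case `Array.prototype.includes('_citrix_ns_id')` would only match an element equal to that exact string.

```javascript
// Assumed subset of the rules in the diff; `contains` is optional.
const WAF_RULES = [
  { waf: 'Cloudflare', header: 'server', contains: 'cloudflare' },
  { waf: 'Akamai', header: 'server', contains: 'AkamaiGHost' },
  { waf: 'Sucuri CloudProxy WAF', header: 'x-sucuri-id' },
  { waf: 'Citrix NetScaler', header: 'set-cookie', contains: '_citrix_ns_id' },
];

// Normalise a header value (string, array of strings, or missing) to one string.
const headerText = (value) =>
  Array.isArray(value) ? value.join('; ') : String(value ?? '');

// Return the first matching rule, in the same result shape the diff uses.
const detectWaf = (headers) => {
  for (const { waf, header, contains } of WAF_RULES) {
    const text = headerText(headers[header]);
    if (text && (contains === undefined || text.includes(contains))) {
      return { hasWaf: true, waf };
    }
  }
  return { hasWaf: false };
};
```

Matching stays case-sensitive, as in the diff; lower-casing both sides would be a separate design decision.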

35

api/follow-redirects.js

Normal file

@@ -0,0 +1,35 @@

exports.handler = async (event) => {
  const { url } = event.queryStringParameters;
  const redirects = [url];

  try {
    const got = await import('got');
    await got.default(url, {
      followRedirect: true,
      maxRedirects: 12,
      hooks: {
        beforeRedirect: [
          (options, response) => {
            redirects.push(response.headers.location);
          },
        ],
      },
    });

    return {
      statusCode: 200,
      body: JSON.stringify({
        redirects: redirects,
      }),
    };
  } catch (error) {
    return errorResponse(`Error: ${error.message}`);
  }
};

const errorResponse = (message, statusCode = 444) => {
  return {
    statusCode: statusCode,
    body: JSON.stringify({ error: message }),
  };
};
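The `beforeRedirect` hook in `api/follow-redirects.js` above records `response.headers.location` verbatim, and HTTP allows that value to be a relative reference. A short sketch of turning raw `Location` values into an absolute chain, resolving each hop against the previous one. `resolveLocation` and `buildRedirectChain` are hypothetical helpers and do not call `got`.

```javascript
// Hypothetical helper: make one Location value absolute relative to the current URL.
const resolveLocation = (currentUrl, location) => new URL(location, currentUrl).href;

// Hypothetical helper: build the absolute redirect chain from raw Location values.
const buildRedirectChain = (startUrl, locations) => {
  const chain = [new URL(startUrl).href];
  for (const location of locations) {
    // Each hop is resolved against the URL that issued the redirect.
    chain.push(resolveLocation(chain[chain.length - 1], location));
  }
  return chain;
};
```

Note that `new URL(...).href` normalises its input, so a start URL without a path gains a trailing slash.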

54

api/generate-har.js

Normal file

@@ -0,0 +1,54 @@

const puppeteer = require('puppeteer-core');
const chromium = require('chrome-aws-lambda');

exports.handler = async (event, context) => {
  let browser = null;
  let result = null;
  let code = 200;

  try {
    const url = event.queryStringParameters.url;

    browser = await chromium.puppeteer.launch({
      args: chromium.args,
      defaultViewport: chromium.defaultViewport,
      executablePath: await chromium.executablePath,
      headless: chromium.headless,
    });

    const page = await browser.newPage();

    const requests = [];

    // Capture requests
    page.on('request', request => {
      requests.push({
        url: request.url(),
        method: request.method(),
        headers: request.headers(),
      });
    });

    await page.goto(url, {
      waitUntil: 'networkidle0', // wait until all requests are finished
    });

    result = requests;

  } catch (error) {
    code = 500;
    result = {
      error: 'Failed to create HAR file',
      details: error.toString(),
    };
  } finally {
    if (browser !== null) {
      await browser.close();
    }
  }

  return {
    statusCode: code,
    body: JSON.stringify(result),
  };
};

@@ -1,7 +1,14 @@

import https from 'https';
import middleware from './_common/middleware.js';
const https = require('https');

const carbonHandler = async (url) => {
exports.handler = async (event, context) => {
  const { url } = event.queryStringParameters;

  if (!url) {
    return {
      statusCode: 400,
      body: JSON.stringify({ error: 'url query parameter is required' }),
    };
  }

  // First, get the size of the website's HTML
  const getHtmlSize = (url) => new Promise((resolve, reject) => {

@@ -42,11 +49,14 @@ const carbonHandler = async (url) => {

    }

    carbonData.scanUrl = url;
    return carbonData;
    return {
      statusCode: 200,
      body: JSON.stringify(carbonData),
    };
  } catch (error) {
    throw new Error(`Error: ${error.message}`);
    return {
      statusCode: 500,
      body: JSON.stringify({ error: `Error: ${error.message}` }),
    };
  }
};

export const handler = middleware(carbonHandler);
export default handler;

26

api/get-cookies.js

Normal file

@@ -0,0 +1,26 @@

const axios = require('axios');

exports.handler = async function(event, context) {
  const { url } = event.queryStringParameters;

  if (!url) {
    return {
      statusCode: 400,